The Open Compute Project Adapters

Founded by Facebook in 2011, the Open Compute Project (OCP) provides specifications for building energy-efficient, scalable, high-performance Web 2.0 data centers. The result is next-generation computing infrastructure, designed and built from the ground up, that is up to 24% less expensive and 38% more energy-efficient than traditional data centers. NVIDIA®, a leading OCP contributor, addresses the demanding needs of these newer data centers by providing an Open Composable Network for an end-to-end network fabric, with unmatched features for open architecture and proven, reliable performance.

Maximizing Productivity with the Highest Return on Investment

There are two options for network processing: consume expensive CPU resources to run sophisticated network protocols (onloading), or offload these tasks to an intelligent network adapter and let the CPU focus on application processing. With NVIDIA, the choice becomes clear: offloading beats onloading for improving application efficiency and reducing the CAPEX and OPEX of the data center.

Enabling Open Platform with Cloud-Scale Agility

For years, networking vendors have locked down their proprietary platforms, providing little or no flexibility to the application. Freedom from vendor lock-in is central to innovating at the pace of Cloud-Scale IT, especially in Web 2.0 and Telco infrastructures. NVIDIA Open-Ethernet Spectrum switch platforms let customers independently choose the best hardware and the best software, with support for multiple network operating system options, including NVIDIA Mellanox Onyx®, Cumulus Linux, Microsoft SONiC, and Metaswitch. This brings efficiency at every level while giving customers flexibility and choice. NVIDIA has also made significant contributions to the Open Compute Project for end-to-end network fabrics.

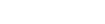

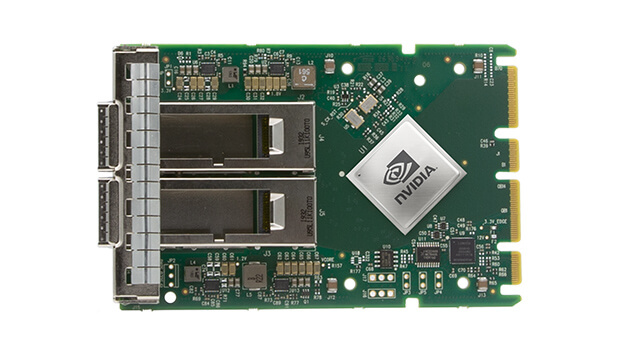

NVIDIA Adapters

ConnectX-6 Virtual Protocol Interconnect® (VPI) adapter cards offer up to two ports of 200Gb/s throughput for InfiniBand and Ethernet connectivity, provide ultra-low latency, deliver 215 million messages per second, and feature innovative smart offloads and in-network computing accelerations that drive performance and efficiency.

ConnectX-6 is a groundbreaking addition to the ConnectX series of industry-leading adapter cards, providing innovative features such as in-network memory capabilities, message passing interface (MPI) tag matching hardware acceleration, out-of-order RDMA write and read operations, and congestion control over HDR, HDR100, EDR, and FDR InfiniBand speeds.

Disaggregation Technology

Disaggregating servers into individual compute and memory resources allows each to be allocated according to the needs of each workload. This eliminates wasted resources and allows the data center to run with optimal efficiency. NVIDIA Mellanox Multi-Host technology enables multiple compute or storage hosts to connect to a single interconnect adapter. This makes it easier to design and build new scale-out heterogeneous compute and storage resources (x86, Power, Arm, GPU, or FPGA) with direct connectivity between compute and storage elements and the network.
