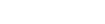

InfiniBand Adapters: ConnectX-6 VPI

Intelligent NVIDIA® Mellanox® ConnectX®-6 adapter cards deliver high performance and NVIDIA In-Network Computing acceleration engines for maximizing efficiency in high-performance computing (HPC), artificial intelligence (AI), cloud, hyperscale, and storage platforms.

Single/Dual-Port Adapter Supporting 200Gb/s

ConnectX-6 Virtual Protocol Interconnect® (VPI) adapter cards offer up to two ports of 200Gb/s throughput for InfiniBand and Ethernet connectivity, provide ultra-low latency, deliver 215 million messages per second, and feature innovative smart offloads and in-network computing accelerations that drive performance and efficiency.
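The two headline figures, 200Gb/s and 215 million messages per second, can be related with simple arithmetic. The sketch below is a back-of-envelope estimate, not a vendor method. It assumes the full 215 million messages per second is available to a single 200Gb/s port, and it ignores packet headers and protocol overhead. Under those assumptions it computes the time budget per message and the message size below which the message rate, rather than the link, becomes the limit. The helper names `ns_per_message` and `crossover_bytes` are hypothetical.

```python
# Back-of-envelope relation between line rate and message rate.
# Assumptions: the quoted message rate applies to one 200Gb/s port,
# and header/protocol overhead is ignored.

LINE_RATE_BPS = 200e9   # 200Gb/s per port, from the datasheet
MESSAGE_RATE = 215e6    # 215 million messages per second, from the datasheet


def ns_per_message(msg_rate: float) -> float:
    """Average time budget per message, in nanoseconds."""
    return 1e9 / msg_rate


def crossover_bytes(line_rate_bps: float, msg_rate: float) -> float:
    """Payload size (bytes) at which message rate and line rate are equal.

    Messages smaller than this are limited by message rate;
    larger ones are limited by link bandwidth.
    """
    return line_rate_bps / 8 / msg_rate


if __name__ == "__main__":
    print(f"{ns_per_message(MESSAGE_RATE):.2f} ns per message")
    print(f"crossover at about {crossover_bytes(LINE_RATE_BPS, MESSAGE_RATE):.0f} bytes")
```

With these inputs the budget is roughly 4.65 ns per message, and the crossover sits near 116 bytes, which is why message rate matters most for small-message workloads such as fine-grained MPI traffic.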

ConnectX-6 is a groundbreaking addition to the ConnectX series of industry-leading adapter cards, providing innovative features such as in-network memory capabilities, message passing interface (MPI) tag matching hardware acceleration, out-of-order RDMA write and read operations, and congestion control over HDR, HDR100, EDR, and FDR InfiniBand speeds.

HIGHLIGHTS

- Port speeds: 2×200Gb/s

- Total bandwidth: 200Gb/s

- Message rate: 215 million msgs/sec

- PCIe lanes: x32, Gen3/Gen4
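The PCIe lane count matters because the host link has to keep up with a 200Gb/s port. The sketch below estimates per-direction PCIe bandwidth from the standard per-lane transfer rates (8 GT/s for Gen3, 16 GT/s for Gen4, both using 128b/130b encoding). It is a rough estimate only: it counts line-encoding overhead and ignores TLP headers, flow control, and other protocol costs, so real throughput is lower. The helper names `pcie_gbps` and `can_feed` are hypothetical.

```python
# Rough per-direction PCIe bandwidth estimate.
# Assumption: only 128b/130b line encoding is counted; TLP/DLLP headers
# and flow-control overhead would reduce real throughput further.

TRANSFER_RATE_GT_S = {3: 8.0, 4: 16.0}  # raw per-lane rate in GT/s
ENCODING_EFFICIENCY = 128 / 130          # Gen3 and Gen4 both use 128b/130b


def pcie_gbps(gen: int, lanes: int) -> float:
    """Approximate usable bandwidth in Gb/s, one direction."""
    return TRANSFER_RATE_GT_S[gen] * ENCODING_EFFICIENCY * lanes


def can_feed(port_gbps: float, gen: int, lanes: int) -> bool:
    """True if the estimated PCIe bandwidth is at least the port speed."""
    return pcie_gbps(gen, lanes) >= port_gbps


if __name__ == "__main__":
    for gen, lanes in [(3, 16), (3, 32), (4, 16)]:
        bw = pcie_gbps(gen, lanes)
        print(f"Gen{gen} x{lanes}: {bw:.1f} Gb/s, "
              f"enough for one 200Gb/s port: {can_feed(200, gen, lanes)}")
```

Under these assumptions, Gen3 x16 (about 126Gb/s) falls short of a single 200Gb/s port, while Gen3 x32 or Gen4 x16 (about 252Gb/s each) clears it, which is consistent with the card offering 32 lanes for Gen3 hosts.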

USE CASE APPLICATIONS

High-Performance Computing (HPC)

ConnectX-6 delivers the highest throughput and message rate in the industry and is the perfect product to lead HPC data centers toward exascale levels of performance and scalability.

ConnectX-6 offers enhancements to HPC infrastructures by providing MPI acceleration and offloading, as well as support for network atomic and PCIe atomic operations.

Machine Learning and AI

Machine learning relies on high throughput and low latency to train deep neural networks and to improve recognition and classification accuracy. ConnectX-6 is the first adapter card to deliver 200Gb/s throughput with support for NVIDIA Scalable Hierarchical Aggregation and Reduction Protocol (SHARP); together with NVIDIA Quantum switches, it provides machine learning applications with the performance and scalability they need.

Storage

NVMe storage devices are gaining momentum by offering exceptionally fast access to storage media, and NVMe over Fabrics (NVMe-oF) uses remote direct memory access (RDMA) to extend that access across the network. With its NVMe-oF target and initiator offloads, ConnectX-6 brings further optimizations to NVMe-oF, improving CPU efficiency and scalability.

Key Features

- HDR / HDR100 / EDR / FDR / QDR / SDR InfiniBand connectivity

- Up to 200Gb/s bandwidth

- Up to 215 million messages per second

- Low latency

- RDMA, send/receive semantics

- Hardware-based congestion control

- Atomic operations

Benefits

- Delivers the highest throughput and message rate in the industry

- Offloads computation to save CPU cycles and increase network efficiency

- Highest performance and most intelligent fabric for compute and storage infrastructures

- Support for x86, Power, Arm, and GPU-based compute and storage platforms

Contact Our Team

If you need more information about our products, do not hesitate to contact our dedicated team.
