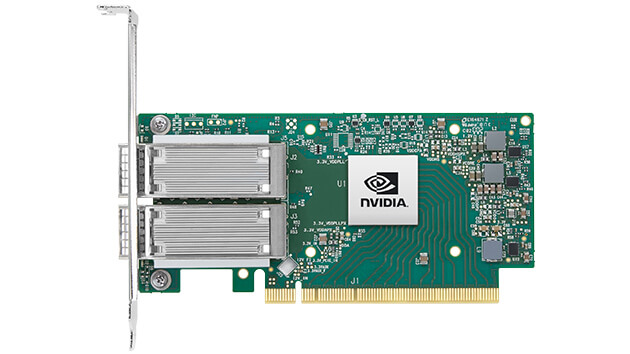

ConnectX-5 EDR 100Gb/s InfiniBand

Leveraging 100Gb/s speeds and innovative NVIDIA In-Network Computing, NVIDIA® Mellanox® ConnectX®-5 adapter cards achieve extreme performance and scale. ConnectX-5 enables supercomputers, hyperscale, and cloud data centers to operate at any scale, while reducing operational costs and infrastructure complexity.

Single/Dual-Port Adapter Supporting 100Gb/s

ConnectX-5 Virtual Protocol Interconnect® (VPI) adapter cards support two ports of 100Gb/s throughput for InfiniBand and Ethernet connectivity, low latency, and high message rate, plus PCIe switch and NVMe over Fabrics (NVMe-oF) offloads, providing a high-performance and flexible solution for the most demanding applications and workloads.

HIGHLIGHTS

PORT SPEEDS

2×100Gb/s

TOTAL BANDWIDTH

200Gb/s with ConnectX-5 Ex

MESSAGE RATE

200 million messages/sec

PCIe LANES

x16 Gen3/Gen4

DRIVING NEW CAPABILITIES FOR ADVANCED APPLICATIONS

High-Performance Computing (HPC)

ConnectX-5 provides exceptional networking performance for demanding HPC and deep learning workloads with message passing interface (MPI) and Rendezvous Tag Matching offloads, as well as support for adaptive routing, out-of-order RDMA data delivery, dynamically connected transport, and burst buffer offloads.

NVIDIA Mellanox Multi-Host

ConnectX-5 enhances NVIDIA Mellanox Multi-Host® technology by supporting up to four separate hosts on a single adapter without any performance degradation.

Storage

ConnectX-5 offers further enhancements by providing NVMe-oF target offloads, enabling efficient NVMe storage access with no CPU intervention for improved performance and lower latency.

Key Features

- Up to 100Gb/s per port

- Total of 200Gb/s with ConnectX-5 Ex on PCIe Gen4 servers

- Support for NVIDIA Multi-Host and NVIDIA Mellanox Socket Direct® configurations

- Adaptive routing on reliable transport

- Embedded PCIe switch

- Available in a variety of form factors: PCIe standup, NVIDIA Socket Direct, OCP2.0, OCP3.0, NVIDIA Multi-Host, and IC standalone
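
The pairing of the 200Gb/s total with PCIe Gen4 in the list above follows from host-link arithmetic. The sketch below is a rough illustration only, not a vendor sizing tool: it assumes the standard per-lane signalling rates (8 GT/s for Gen3, 16 GT/s for Gen4) and 128b/130b encoding, and it ignores packet-level protocol overhead, so usable bandwidth is somewhat lower than the figures it prints. The function names are hypothetical.

```python
# Per-lane raw signalling rate in GT/s for each PCIe generation.
GT_PER_LANE = {"Gen3": 8.0, "Gen4": 16.0}

# Gen3 and Gen4 both use 128b/130b line encoding.
ENCODING_EFFICIENCY = 128 / 130


def pcie_bandwidth_gbps(gen: str, lanes: int = 16) -> float:
    """Approximate one-direction link bandwidth in Gb/s, before protocol overhead."""
    return GT_PER_LANE[gen] * lanes * ENCODING_EFFICIENCY


def fits(gen: str, ports: int = 2, port_speed_gbps: float = 100.0, lanes: int = 16) -> bool:
    """True if the host link can carry the aggregate line rate of all ports."""
    return pcie_bandwidth_gbps(gen, lanes) >= ports * port_speed_gbps


if __name__ == "__main__":
    for gen in GT_PER_LANE:
        bw = pcie_bandwidth_gbps(gen)
        print(f"{gen} x16: {bw:.1f} Gb/s, carries 2x100Gb/s: {fits(gen)}")
```

Under these assumptions, a Gen3 x16 link tops out near 126 Gb/s, enough for one 100Gb/s port at line rate but not two, while a Gen4 x16 link offers roughly 252 Gb/s.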

Benefits

- Industry-leading throughput, low latency, low CPU utilization, and high message rate

- Enables higher HPC performance with new MPI offloads

- Advanced storage capabilities, including NVMe-oF offloads

- Support for x86, Power, Arm, and GPU-based compute and storage platforms

RESOURCES

Contact Our Team

If you need more information about our products, do not hesitate to contact our dedicated team.
