CS7500 InfiniBand Series

The massive growth of data and of real-time data processing demands faster, more efficient interconnects for high-performance computing (HPC), cloud, and hyperscale data centers. NVIDIA® Mellanox® CS7500 switches deliver low latency and 100Gb/s of bandwidth per port in a chassis of up to 28U, with assured bandwidth and granular quality of service. The CS7500 series’ capabilities provide high system performance, scalability, and network utilization.

Scaling out Data Centers

The NVIDIA Mellanox CS7500 series of smart director switches provides up to 648 enhanced data rate (EDR) 100Gb/s ports in a form factor of up to 28U, enabling a high-performing fabric for HPC, AI, cloud, and hyperscale data center infrastructures. Its design simplifies the building of clusters that can scale out to thousands of nodes. Moreover, the leaf blades, spine blades, management modules, power supply units (PSUs), and fan units are all hot-swappable, so they can be replaced without taking the switch offline.

HIGHLIGHTS

- Link speed: 100Gb/s

- Number of ports: 648 EDR

- Max. throughput: 130Tb/s

- Chassis size: 28U

- Power consumption (ATIS): 6.4kW

WORLD-CLASS INFINIBAND PERFORMANCE

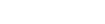

In-Network Computing

NVIDIA Mellanox Scalable Hierarchical Aggregation and Reduction Protocol (SHARP)™ In-Network Computing technology offloads collective communication operations from the CPU to the switch network, improving application performance by an order of magnitude.

Self-Healing Networking

NVIDIA Mellanox InfiniBand with self-healing networking capabilities overcomes link failures and achieves network recovery 5,000X faster than any software-based solution—enhancing system performance, scalability, and network utilization.

UFM Management

NVIDIA Mellanox Unified Fabric Management (UFM®) platforms combine enhanced, real-time network telemetry with AI-powered cyber intelligence and analytics to realize higher utilization of fabric resources and a competitive advantage, while reducing OPEX.

Key Features

- Up to 648 EDR 100Gb/s ports in a 28U switch

- Up to 130Tb/s switching capacity

- Ultra-low latency

- InfiniBand Trade Association (IBTA) specification 1.3 and 1.2.1 compliant

- Quality-of-service enforcement

- N+N power supply

- Integrated subnet manager agent (up to 2k nodes)

- Fast and efficient fabric bring-up

- Comprehensive chassis management

- Intuitive command-line interface (CLI) and graphical user interface (GUI) for easy access

- Can be enhanced with UFM

- Temperature sensors and voltage monitors

- Fan speed controlled by management software

Benefits

- High ROI—energy efficiency, cost savings, and scalable high performance

- High-performance fabric for parallel computation or input/output (I/O) convergence

- Up to 648 ports for modular scalability

- High-bandwidth, low-latency fabric for compute-intensive applications

- Quick and easy setup and management

- Maximizes performance by removing fabric congestion

- Fabric management for cluster and converged I/O applications

CS7500 Series Comparison Table

| Model | Link Speed | Ports | Height | Switching Capacity | Cooling System | Spine Modules | Leaf Modules | Interface | Management Modules | Number of PSUs |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| CS7500 | 100Gb/s | 648 | 28U | 130Tb/s | 20 fans | 18 | 18 | QSFP28 | 1–2 | 10 |
| CS7510 | 100Gb/s | 324 | 16U | 64.8Tb/s | 12 fans | 9 | 9 | QSFP28 | 1–2 | 6 |
| CS7520 | 100Gb/s | 216 | 12U | 43.2Tb/s | 8 fans | 6 | 6 | QSFP28 | 1–2 | 4 |
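
The switching-capacity and port figures in the comparison table appear to follow simple arithmetic, which the short Python sketch below checks. It assumes that switching capacity counts both directions of every full-duplex 100Gb/s port (the factor of 2 is an assumption, not something the table states) and that ports are spread evenly across leaf modules. The dictionary and function names are illustrative only.

```python
# Port, leaf-module, and capacity figures are copied from the comparison table.
MODELS = {
    "CS7500": {"ports": 648, "leaf_modules": 18, "listed_tbps": 130.0},
    "CS7510": {"ports": 324, "leaf_modules": 9, "listed_tbps": 64.8},
    "CS7520": {"ports": 216, "leaf_modules": 6, "listed_tbps": 43.2},
}

PORT_SPEED_GBPS = 100  # EDR link speed per port


def switching_capacity_tbps(ports, port_speed_gbps=PORT_SPEED_GBPS):
    """Aggregate capacity in Tb/s, counting both directions of each port.

    The factor of 2 (full duplex) is an assumption used to match the table.
    """
    return ports * port_speed_gbps * 2 / 1000


def ports_per_leaf(ports, leaf_modules):
    """Ports per leaf module, assuming an even split across modules."""
    return ports // leaf_modules


if __name__ == "__main__":
    for name, spec in MODELS.items():
        computed = switching_capacity_tbps(spec["ports"])
        per_leaf = ports_per_leaf(spec["ports"], spec["leaf_modules"])
        print(
            f"{name}: computed {computed:.1f} Tb/s, "
            f"listed {spec['listed_tbps']} Tb/s, "
            f"{per_leaf} ports per leaf module"
        )
```

Under these assumptions the CS7500 works out to 129.6Tb/s, which the table rounds to 130Tb/s, while the CS7510 and CS7520 match their listed values exactly. All three models come to 36 ports per leaf module.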
